
The effects of traffic bursts on network hardware


We’ve written extensively about the phenomenon of network microbursts and how to use the iPerf network performance tool to create them in order to test their effects on your network. Our interest in them grew out of our work with Velocimetrics, since microbursts can have pretty significant effects in financial/trade markets.

Our journey down the rabbit-hole got us interested in seeing the effects of microbursts on switches and interfaces in a test network. We wanted to find a switch that would drop traffic when we sent “bursty” traffic through it. We ended up uncovering an even deeper problem.

Our exercise demonstrated how the root cause of packet loss can be very difficult to pin down, and how to use tools like iPerf and packet capture in the right way to find it.

Our initial setup

When experimenting with microbursts, we wanted to set up a simple network with a piece of networking gear (in this case a 100 Mbps switch) to serve as the subject. Part of the setup involved sending traffic from more than one interface to the switch, in order to see if microbursts were having an effect on the switch’s packet buffers. As a matter of convenience, we wanted to use a single machine to run the iPerf client(s), so we added a 1000/100/10 Mbps USB adapter to serve as the second interface.

Using this, we could run two iPerf clients to send traffic bursts through the switch.

Sending traffic bursts in iPerf

To test this, we wanted to start with a burst pacing of 1 second (one million microseconds), which will give us the most “burstiness” coming from iPerf directly. Using the --pacing-timer option in iPerf, we ran the following command from each client:

iperf3 -c <server> -u -b 100M --pacing-timer 1000000

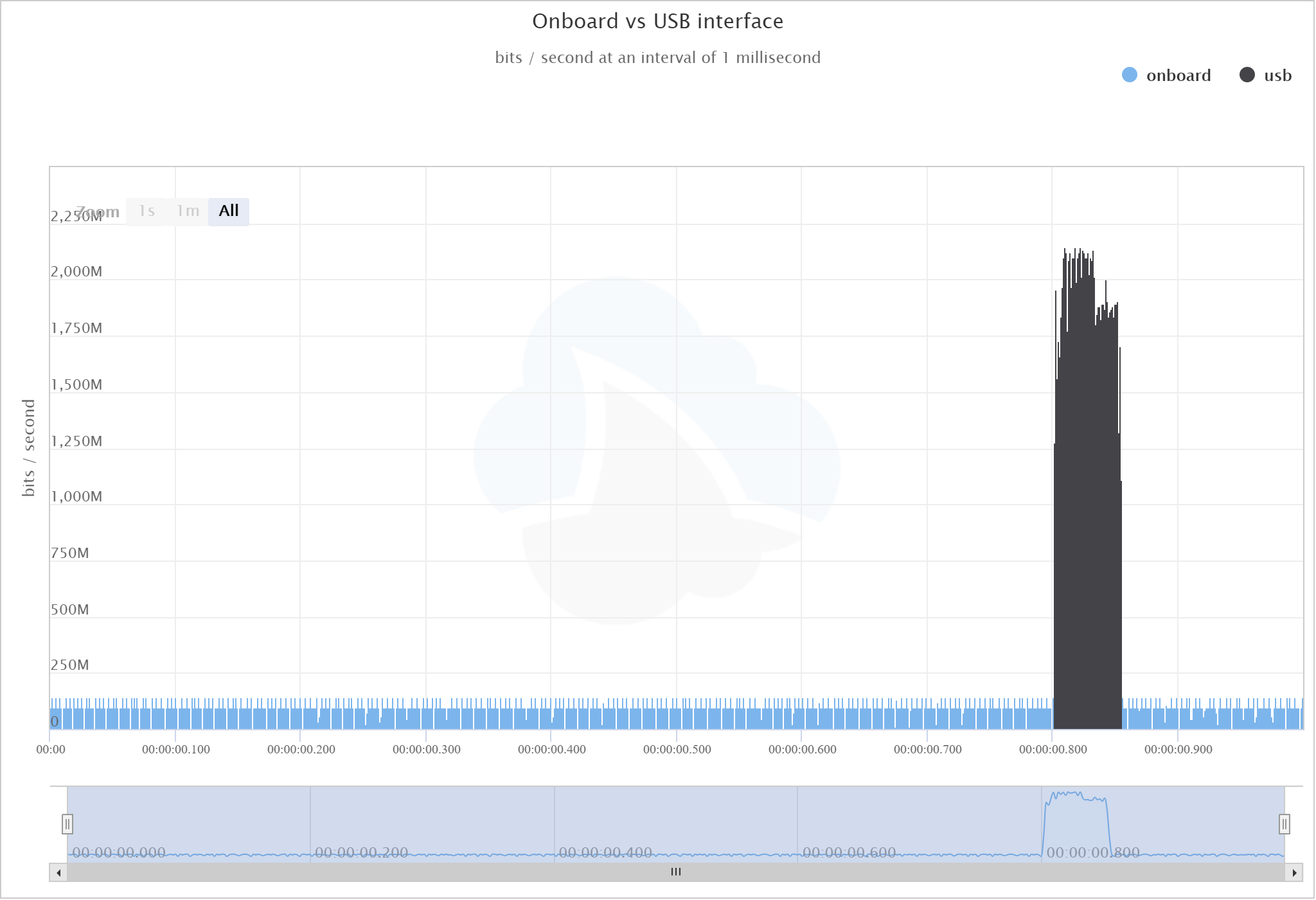

During these tests, we ran tcpdump on both the client and server. Here’s the capture from the client, and a graph of the data sent by each interface:

From this graph you can see that the two network interfaces (the internal NIC and the USB dongle) sent data very differently. The internal NIC sent at a fairly constant 100 Mb/sec, but the USB NIC sent at 1 Gb/sec for 100 ms and then nothing for the rest of the second - the very definition of a microburst.
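The arithmetic behind that shape is easy to check. The sketch below is only a back-of-the-envelope model, assuming the whole second's worth of data leaves in one burst at the adapter's line rate; the function name `burst_profile` is ours, not part of iPerf.

```python
def burst_profile(avg_bps: float, line_bps: float, interval_s: float) -> tuple[float, float]:
    """Return (burst_seconds, idle_seconds) for one pacing interval.

    Assumes all data for the interval is sent back-to-back at line rate.
    """
    bits_per_interval = avg_bps * interval_s   # data owed for this interval
    burst_s = bits_per_interval / line_bps     # time needed at line rate
    return burst_s, interval_s - burst_s


# 100 Mb/sec average, 1 Gb/sec adapter, 1 second pacing interval
burst_s, idle_s = burst_profile(100e6, 1e9, 1.0)
print(f"burst: {burst_s * 1000:.0f} ms, idle: {idle_s * 1000:.0f} ms")
```

With these inputs the model gives a 100 ms burst followed by 900 ms of silence, which matches what the capture showed for the USB NIC.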

Different packet loss rates?

iPerf calculates packet loss by comparing the data sent by the client with the data received by the server. During this test, iPerf’s results showed 90% packet loss for traffic sourced through the USB NIC! At first we assumed that the switch was dropping the packets due to the microburst, but thanks to advice from the folks at packet-foo.com, we knew that you can miss a lot of information about the root cause when you rely on captures taken at the source rather than in-line using a TAP.

Using a TAP to find the problem

A TAP (Test Access Point) is a hardware tool for network monitoring. You can think of a TAP as a network probe that sits in-line between the data source and the device you are evaluating for network performance. Using a TAP, rather than capturing on the source machine, lets you see the data as it appears “on-the-wire” rather than a record of what the operating system believes it is sending.

In our second test, we sent 10Mb/sec with 1 second pacing:

iperf3 -c <server> -u -b 10M --pacing-timer 1000000

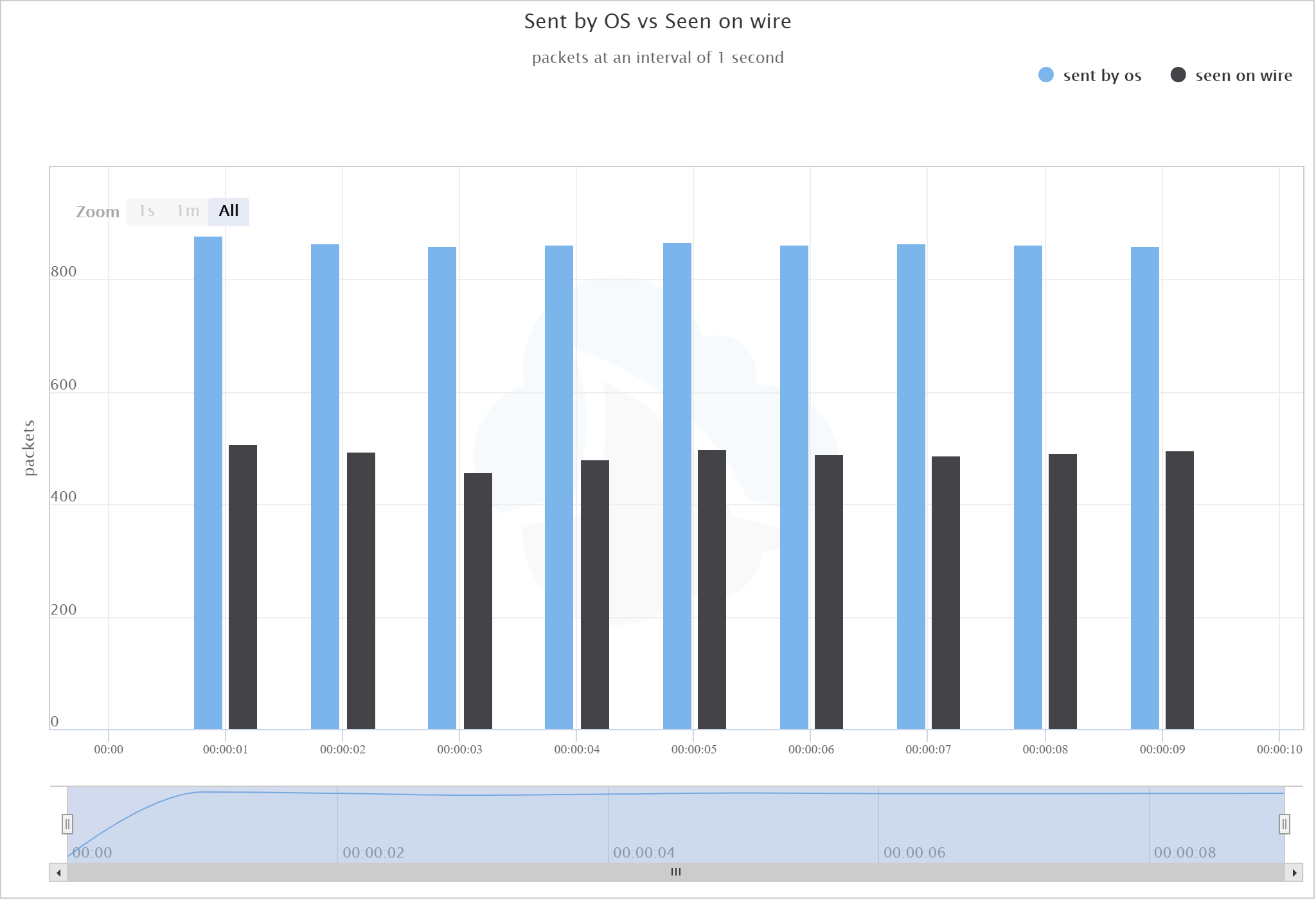

iPerf’s results showed a ~50% packet loss from the client sending traffic through the USB NIC. By taking a packet capture on the TAP, we could see what was actually being sent to the switch. What we found was that not all of the traffic in the capture taken on the host was actually being sent on-the-wire.

To spot the difference, we merged the capture from the OS with the capture taken by the TAP. However, we needed a way to differentiate the two. A side effect of capturing directly on the local system is that, with checksum offloading, the OS doesn’t calculate UDP checksums itself; the network interface does that work after the point where the capture is taken. So, by relying on the fact that packets captured at the OS level would have bad UDP checksums while packets captured by the TAP would have valid ones, we could graph the two captures together. Here’s a graph of the difference:

The blue bars show packets sent by the OS; the black bars show packets seen on the wire.

This graph showed us where the packet loss happened. It wasn’t because the switch was dropping packets. The loss occurred before the traffic ever reached the switch, and it came from using this USB Ethernet adapter!
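The checksum trick used to tell the two captures apart can be illustrated in a few lines. This is a minimal sketch rather than the tooling we actually used: it assumes IPv4, works on raw UDP segments (header plus payload) that you have already extracted from a capture, and all function names and addresses are ours for illustration.

```python
import socket
import struct


def ones_complement_sum(data: bytes) -> int:
    """Fold the 16-bit one's-complement sum of data into 16 bits."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(word for (word,) in struct.iter_unpack("!H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return total


def pseudo_header(src_ip: str, dst_ip: str, udp_len: int) -> bytes:
    """IPv4 pseudo-header covered by the UDP checksum (protocol 17)."""
    return (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
            + struct.pack("!BBH", 0, 17, udp_len))


def build_udp(src_ip: str, dst_ip: str, sport: int, dport: int, payload: bytes) -> bytes:
    """Build a UDP segment with a correct checksum, as a NIC would emit it."""
    length = 8 + len(payload)
    header = struct.pack("!HHHH", sport, dport, length, 0)
    csum = ~ones_complement_sum(pseudo_header(src_ip, dst_ip, length) + header + payload) & 0xFFFF
    csum = csum or 0xFFFF  # a computed zero is transmitted as 0xFFFF
    return struct.pack("!HHHH", sport, dport, length, csum) + payload


def udp_checksum_ok(src_ip: str, dst_ip: str, segment: bytes) -> bool:
    """True if the checksum stored in the segment verifies."""
    (stored,) = struct.unpack("!H", segment[6:8])
    if stored == 0:
        return True  # zero means the sender did not compute a checksum
    return ones_complement_sum(pseudo_header(src_ip, dst_ip, len(segment)) + segment) == 0xFFFF


# Hypothetical addresses and ports, for illustration only.
SRC, DST = "192.0.2.1", "192.0.2.2"
on_wire = build_udp(SRC, DST, 50000, 5201, b"iperf payload")

# Stand-in for an OS-level capture: the checksum field holds a value
# that the NIC has not yet replaced with the real checksum.
(stored,) = struct.unpack("!H", on_wire[6:8])
os_side = on_wire[:6] + struct.pack("!H", stored % 0xFFFF + 1) + on_wire[8:]

for name, segment in (("TAP capture", on_wire), ("OS capture", os_side)):
    label = "valid" if udp_checksum_ok(SRC, DST, segment) else "bad"
    print(f"{name}: checksum {label}")
```

In a merged capture, packets that verify can be attributed to the TAP and packets that fail to the host, which is what lets both be plotted on one graph.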

Microbursts as the root cause

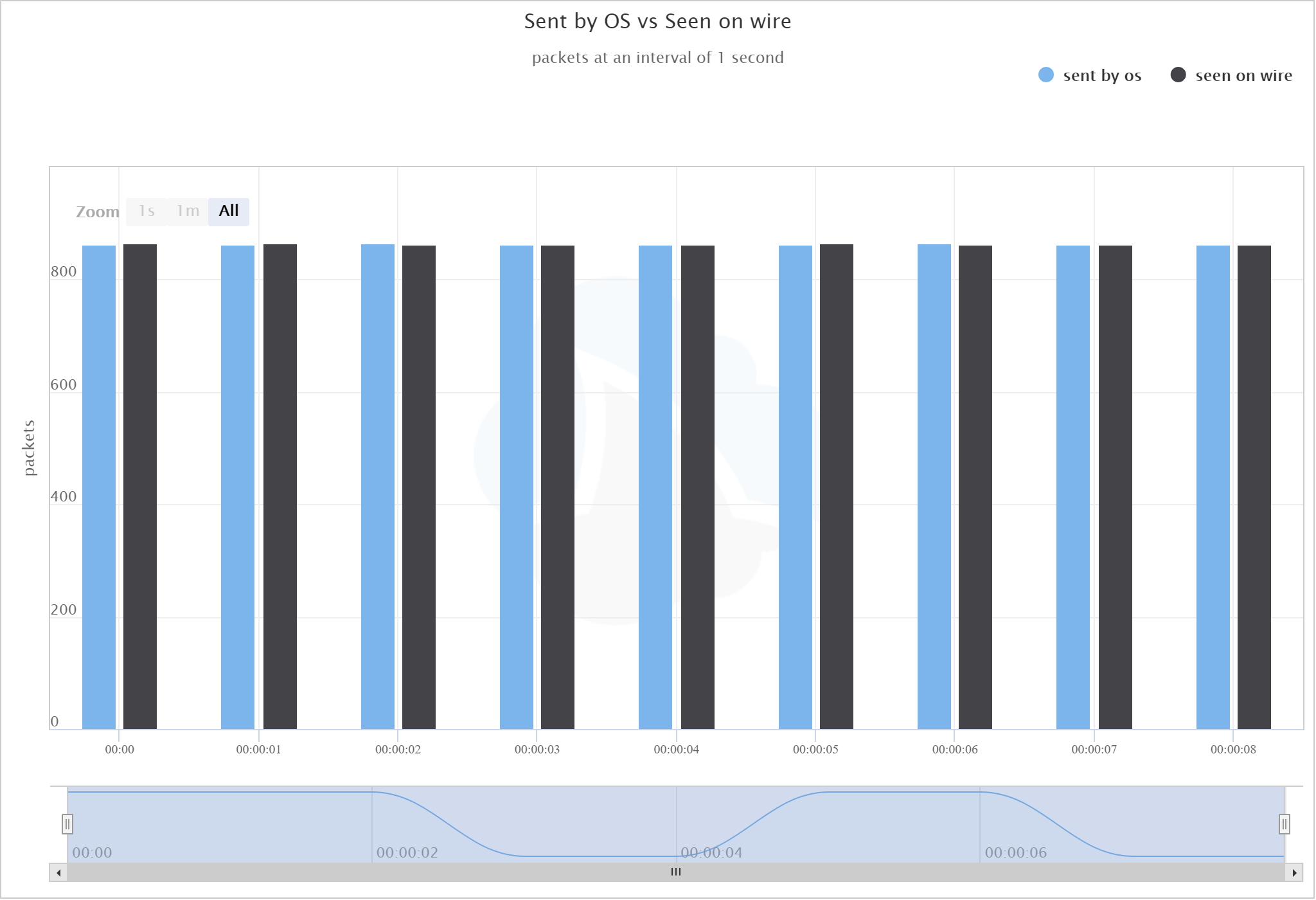

Now that we knew where the loss was happening, we used iPerf again to see if the problem was caused by microbursts. Here we ran the test again with the following command to set the pacing to 1000 microseconds:

iperf3 -c <server> -u -b 10M --pacing-timer 1000

This “smoothed out” the rate at which the traffic was sent, so those drastic bursts didn’t occur. When we compared the OS capture with the on-the-wire capture after this test, we could see that the loss was mitigated:

Again, the blue bars show packets sent by the OS; the black bars show packets seen on the wire.
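To get a feel for why the shorter pacing timer helps, it is worth working out how much data is owed per timer tick. The sketch below is a simplification, assuming iPerf sends one catch-up burst each time the pacing timer fires; the 1460-byte datagram size is our assumption, not something we measured, and `burst_per_tick` is a name we made up.

```python
def burst_per_tick(bitrate_bps: int, pacing_us: int, datagram_bytes: int = 1460) -> tuple[int, float]:
    """Return (bytes owed per timer tick, approximate datagrams per tick).

    datagram_bytes is an assumed UDP payload size.
    """
    bytes_per_tick = bitrate_bps * pacing_us // 8_000_000  # bits/s * us -> bytes
    return bytes_per_tick, bytes_per_tick / datagram_bytes


for pacing_us in (1_000_000, 1_000):
    size, datagrams = burst_per_tick(10_000_000, pacing_us)
    print(f"pacing {pacing_us:>9} us: {size:>9} bytes per tick, about {datagrams:.1f} datagrams")
```

Under these assumptions, 10 Mb/sec with a 1 second timer means about 1.25 MB (roughly 856 datagrams) handed to the adapter in one go, while a 1000 microsecond timer means about 1250 bytes, or less than one datagram per tick.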

What are we learning here?

People tend to blame the network when things are slow or performing poorly. In this case, the problem arose before the traffic even left the source - possibly a USB issue, a driver problem, a macOS bug, or a problem with the hardware itself. Microbursts are tricky, and it’s very difficult to nail down exactly where they are causing a problem.

This is why “they” tell you not to run servers behind a low-cost USB interface. What would happen if this were a web server pushing lots of traffic?

Additionally, it’s important when doing this type of network investigation that you take your captures from the right location; capturing directly from your local host will give you different results than those seen by the network itself.

We’re still on a mission to find a switch that will drop bursty traffic. We set out to test one hypothesis and came back with something totally different. Such is the way with packets! We’ll keep hunting - stay tuned.
